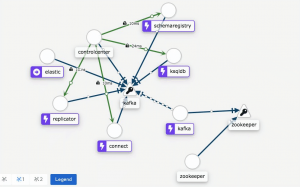

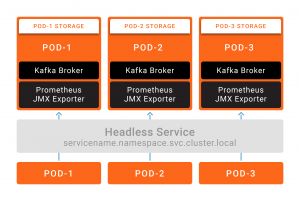

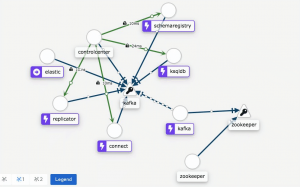

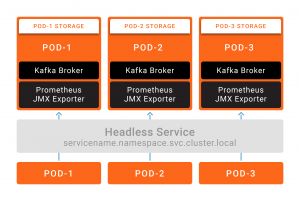

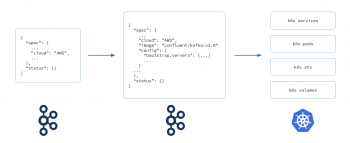

If you are using the Kafka Streams API, read on to learn how to configure the equivalent SSL and SASL parameters. For Confluent Control Center stream monitoring to work with Kafka Connect, you must configure SASL/PLAIN for the Confluent Monitoring Interceptors in Kafka Connect. The Kafka ecosystem includes two mature Kubernetes operators, one open source and one commercial.

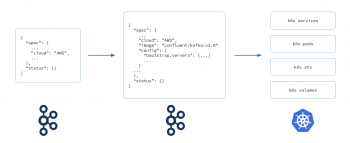

Kafka Streams is a client library for building applications and microservices whose input and output data are stored in an Apache Kafka cluster. It combines the simplicity of writing and deploying standard Java and Scala applications on the client side with the benefits of Kafka's server-side cluster technology.

This page will help you get started with Kafka Connect. When you sign up for Confluent Cloud, apply promo code C50INTEG to receive an additional $50 of free usage. From the Console, click LEARN to provision a cluster, and click Clients to get the cluster-specific configurations and credentials.

Built and operated by the original creators of Apache Kafka, Confluent Cloud provides a simple, scalable, resilient, and secure event streaming platform for the cloud-first enterprise, the DevOps-starved organization, or the agile developer on a mission.

- Module 2 of the Confluent Platform demo (cp-demo), with a playbook for copying data between the on-prem and Confluent Cloud clusters.
- GKE to Cloud (N, Y): uses Google Kubernetes Engine, Confluent Cloud, and Confluent Replicator to explore a multicloud deployment.
- DevOps for Apache Kafka with Kubernetes and GitOps (N, N).

The example Kafka use cases above could also be considered Confluent Platform use cases. In Confluent Platform, real-time streaming events are stored in a Kafka topic, which is essentially an append-only log. For more information, see the Apache Kafka Introduction.
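To make the "append-only log" idea concrete, here is a minimal Python sketch of a single topic partition: records are only ever appended, each one receives the next sequential offset, and readers fetch from an offset they track themselves. It is an illustrative in-memory model under simplifying assumptions (one partition, no retention, compaction, or replication), not how Kafka stores data on disk; the class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, List, Tuple


@dataclass
class AppendOnlyLog:
    """Toy model of one topic partition: an in-memory, append-only list of records."""

    records: List[Tuple[Any, Any]] = field(default_factory=list)

    def append(self, key: Any, value: Any) -> int:
        """Append a record and return its offset (its position in the log)."""
        self.records.append((key, value))
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> List[Tuple[int, Any, Any]]:
        """Return up to max_records records starting at offset, as (offset, key, value)."""
        end = min(offset + max_records, len(self.records))
        return [(i, *self.records[i]) for i in range(offset, end)]

    @property
    def end_offset(self) -> int:
        """Offset that the next appended record will receive."""
        return len(self.records)


if __name__ == "__main__":
    log = AppendOnlyLog()
    log.append("sensor-1", 21.5)
    log.append("sensor-2", 19.0)
    log.append("sensor-1", 21.7)
    # A reader that has already processed offset 0 resumes from offset 1.
    print(log.read(1))
```

Because existing records are never modified, several independent readers can consume the same log at different offsets without interfering with each other.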
Many of the commercial Confluent Platform features are built into the brokers as a function of Confluent Server. Apache Kafka uses ZooKeeper to store persistent cluster metadata, which makes ZooKeeper a critical component of a Confluent Platform deployment. The server side (Kafka broker, ZooKeeper, and Confluent Schema Registry) can be separated from the business applications.

The easiest way to follow this tutorial is with Confluent Cloud, because you don't have to run a local Kafka cluster.

Step 2: Create Kafka topics for storing your data. In this step, you create two topics by using Confluent Control Center, which provides the features for building and monitoring production data pipelines. A topic can also be created from the command line with `./bin/kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --…`; newer Kafka releases use `--bootstrap-server` instead of `--zookeeper` for this tool.

The official MongoDB Connector for Apache Kafka is developed and supported by MongoDB engineers and verified by Confluent. The connector enables MongoDB to be configured as both a sink and a source for Apache Kafka.

To see a comprehensive list of supported clients, refer to the Clients section under Supported Versions and Interoperability. This section gives a high-level overview of how the consumer works and an introduction to the configuration settings for tuning.
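One part of how consumers work is that the partitions of a subscribed topic are divided among the members of a consumer group. The Python sketch below models a range-style strategy for a single topic: sort the consumers, give each a contiguous block of partitions, and hand any remainder to the first consumers. It is a simplified illustration modeled on the behavior of Kafka's range assignor, not the client's actual code; the function name is hypothetical.

```python
from typing import Dict, List


def range_assign(partitions: int, consumers: List[str]) -> Dict[str, List[int]]:
    """Assign partitions 0..partitions-1 of one topic to consumers in contiguous ranges."""
    members = sorted(consumers)  # consumers are ordered lexicographically
    if not members:
        return {}
    per_consumer, extra = divmod(partitions, len(members))
    assignment: Dict[str, List[int]] = {}
    start = 0
    for i, member in enumerate(members):
        # The first `extra` consumers each receive one additional partition.
        count = per_consumer + (1 if i < extra else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


if __name__ == "__main__":
    print(range_assign(5, ["consumer-b", "consumer-a"]))
```

With more consumers than partitions, some consumers receive an empty range and stay idle, which is why the partition count caps a group's parallelism.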
At its core, Confluent Platform is a specialized distribution of Kafka, with many additional features and APIs built in. In cloud deployments that leverage managed services like Confluent Cloud, dedicated infrastructure teams are not usually required. Confluent provides an official Docker image for Kafka (Community Version). Kafka Connect 101 is also a free course you can check out before moving ahead.

One reason that separating packaging and deployment from the stream processing framework is so important is that packaging and deployment is an area undergoing its own renaissance.

The following functionality is currently exposed and available through Confluent REST APIs:

- Metadata: Most metadata about the cluster (brokers, topics, partitions, and configs) can be read using GET requests for the corresponding URLs.
- Producers: Instead of exposing producer objects, the API accepts produce requests targeted at specific topics or partitions and …
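The two REST capabilities listed above map onto ordinary HTTP calls. The following Python sketch only builds (does not send) the requests, using the standard library's `urllib.request`. It assumes a Confluent REST Proxy exposing the v2 API at `http://localhost:8082` and a hypothetical topic named `orders`; check the REST Proxy API reference for the exact endpoints and content types your version supports.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Assumption: a REST Proxy (v2 API) running locally on its default port.
BASE_URL = "http://localhost:8082"


def topic_metadata_request(topic: str) -> urllib.request.Request:
    """Build a GET request that reads metadata for one topic."""
    return urllib.request.Request(
        f"{BASE_URL}/topics/{topic}",
        headers={"Accept": "application/vnd.kafka.v2+json"},
        method="GET",
    )


def produce_request(topic: str, values: List[Dict[str, Any]]) -> urllib.request.Request:
    """Build a POST request that produces JSON-encoded records to a topic."""
    body = json.dumps({"records": [{"value": v} for v in values]}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/topics/{topic}",
        data=body,
        headers={"Content-Type": "application/vnd.kafka.json.v2+json"},
        method="POST",
    )


if __name__ == "__main__":
    req = produce_request("orders", [{"id": 1, "amount": 9.99}])
    print(req.get_method(), req.full_url)
    # To actually send it against a running proxy:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.read().decode())
```

Separating request construction from sending keeps the sketch testable without a running proxy; `urllib.request.urlopen` performs the actual call.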
For the Confluent Replicator image (cp-enterprise-replicator), convert the property names as follows and use them as environment variables:

- Prefix with CONNECT_.
- Convert to upper-case.
- Separate each word with _.
- Replace a period (.) with a single underscore (_).
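The conversion rules above are mechanical, so they can be expressed as a small function. This Python sketch applies exactly the listed rules (prefix, upper-case, periods to single underscores) and does not attempt to handle any other characters; the function name is hypothetical.

```python
def to_replicator_env_var(property_name: str) -> str:
    """Convert a Connect/Replicator property name into an environment variable name."""
    # Replace each period with a single underscore so words are separated by "_".
    converted = property_name.replace(".", "_")
    # Upper-case the result and add the CONNECT_ prefix.
    return "CONNECT_" + converted.upper()


if __name__ == "__main__":
    for prop in ["bootstrap.servers", "group.id", "config.storage.topic"]:
        print(f"{prop} -> {to_replicator_env_var(prop)}")
```

For example, `bootstrap.servers` becomes `CONNECT_BOOTSTRAP_SERVERS`.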

Confluent also has a Kafka-based distribution with added security, tiered storage, and more. Confluent provides a truly cloud-native experience, completing Kafka with a holistic set of enterprise-grade features to unleash developer productivity, operate efficiently at scale, and meet all of your architectural requirements before moving to production.

To see examples of consumers written in various languages, refer to the specific language sections. Confluent recommends that you read and understand Kafka Connect Concepts before moving ahead.

The new Producer and Consumer clients support security for Kafka versions 0.9.0 and higher. The client configuration assumes that the broker requires client authentication, so the client settings can be stored in a client properties file.
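As an illustration of such a client properties file, the fragment below configures a client for SSL with client authentication, which is what a broker that requires client authentication expects. The file paths and passwords are placeholders, and the exact set of settings depends on how your brokers are configured.

```properties
# Connect to the broker over TLS
security.protocol=SSL
# Truststore used to verify the broker's certificate (placeholder path and password)
ssl.truststore.location=/var/private/ssl/kafka.client.truststore.jks
ssl.truststore.password=<truststore-password>
# Keystore holding the client's own certificate, needed when the broker enforces client authentication
ssl.keystore.location=/var/private/ssl/kafka.client.keystore.jks
ssl.keystore.password=<keystore-password>
ssl.key.password=<key-password>
```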

Confluent Platform includes the Java consumer shipped with Apache Kafka.

Josh Software, part of a project in India to house more than 100,000 people in affordable smart homes, pushes data from millions of sensors to Kafka, processes it in Apache Spark, and writes the results to MongoDB, which connects the operational and analytical data sets. By streaming data from millions of sensors in near real time, the project is creating truly smart homes, and …

Pulsar has a heavyweight architecture that is the most complex in this comparison.

Beginning with Confluent Platform version 6.0, Kafka Connect can automatically create topics for source connectors if the topics do not exist on the Apache Kafka broker.
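For source connectors, automatic topic creation is driven by properties in the connector configuration. The fragment below is a sketch based on the `topic.creation.*` settings introduced for this feature (KIP-158); the values and the group name `compacted` are placeholders, and the worker-level `topic.creation.enable` setting (enabled by default) must not be turned off. Confirm the property names against the Kafka Connect documentation for your version.

```properties
# Connector configuration (source connectors only).
# Defaults applied to any topic the connector writes to that does not exist yet.
topic.creation.default.replication.factor=3
topic.creation.default.partitions=6
# Optional: a named group (hypothetical name "compacted") with its own settings for matching topics
topic.creation.groups=compacted
topic.creation.compacted.include=status-.*
topic.creation.compacted.cleanup.policy=compact
```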

Confluent Replicator is a Kafka connector and runs on a Kafka Connect cluster. Configure the Connect workers by adding the monitoring interceptor properties to connect-distributed.properties, depending on whether the connectors are sources or sinks.
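As an example of the worker properties involved, the fragment below adds the Confluent Monitoring Interceptors to a Connect worker and configures them for SASL/PLAIN, as needed for Control Center stream monitoring. It assumes a SASL_SSL listener; the `producer.` prefix covers source connectors and the `consumer.` prefix covers sink connectors. The credentials are placeholders; verify the property names against the Control Center and Connect security documentation for your Confluent Platform version.

```properties
# Source connectors: interceptor on the worker's embedded producers
producer.interceptor.classes=io.confluent.monitoring.clients.interceptor.MonitoringProducerInterceptor
producer.confluent.monitoring.interceptor.security.protocol=SASL_SSL
producer.confluent.monitoring.interceptor.sasl.mechanism=PLAIN
producer.confluent.monitoring.interceptor.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="<user>" password="<password>";

# Sink connectors: interceptor on the worker's embedded consumers
consumer.interceptor.classes=io.confluent.monitoring.clients.interceptor.MonitoringConsumerInterceptor
consumer.confluent.monitoring.interceptor.security.protocol=SASL_SSL
consumer.confluent.monitoring.interceptor.sasl.mechanism=PLAIN
consumer.confluent.monitoring.interceptor.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="<user>" password="<password>";
```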

Deployment tooling such as Kubernetes and Confluent Operator eases cluster management significantly.

Access Control Lists (ACLs) provide important authorization controls for your enterprise's Apache Kafka cluster data. Before attempting to create and use ACLs, familiarize yourself with the ACL concepts described in this section; understanding them is key to successfully creating and using ACLs to manage access to components and cluster data.
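An ACL binds a principal, an operation, and a resource to an allow or deny decision. The Python sketch below models that evaluation in simplified form, assuming literal resource names plus a `*` wildcard, ignoring hosts and prefixed patterns, and treating "no matching ACL" as a denial (the behavior when `allow.everyone.if.no.acl.found` is false). A matching DENY always wins over a matching ALLOW. The class and function names are hypothetical; this is not the broker's authorizer.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Acl:
    principal: str      # e.g. "User:alice", or "User:*" for any user
    operation: str      # e.g. "READ", "WRITE", or "ALL"
    resource_type: str  # e.g. "TOPIC", "GROUP"
    resource_name: str  # literal name, or "*" for every resource of that type
    permission: str     # "ALLOW" or "DENY"


def _matches(acl: Acl, principal: str, operation: str,
             resource_type: str, resource_name: str) -> bool:
    return (
        acl.principal in (principal, "User:*")
        and acl.operation in (operation, "ALL")
        and acl.resource_type == resource_type
        and acl.resource_name in (resource_name, "*")
    )


def is_authorized(acls: Iterable[Acl], principal: str, operation: str,
                  resource_type: str, resource_name: str) -> bool:
    """Deny wins over allow; with no matching ACL at all, access is denied."""
    matching = [a for a in acls
                if _matches(a, principal, operation, resource_type, resource_name)]
    if any(a.permission == "DENY" for a in matching):
        return False
    return any(a.permission == "ALLOW" for a in matching)


if __name__ == "__main__":
    acls = [
        Acl("User:alice", "READ", "TOPIC", "orders", "ALLOW"),
        Acl("User:*", "ALL", "TOPIC", "payments", "DENY"),
    ]
    print(is_authorized(acls, "User:alice", "READ", "TOPIC", "orders"))   # True
    print(is_authorized(acls, "User:alice", "WRITE", "TOPIC", "orders"))  # False
```

Evaluating all matching ACLs before deciding, rather than stopping at the first match, is what makes an explicit DENY override any ALLOW.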
Confluent Platform includes client libraries for multiple languages that provide both low-level access to Apache Kafka and higher-level stream processing. Easily build robust, reactive data pipelines that stream events between applications and services in real time.
Access Control Lists (ACLs) provide important authorization controls for your enterprises Apache Kafka cluster data. How to use Kafka Connect - Getting Started. Kafka Connect 101 is also a free course you can check out before moving ahead.. Many of the commercial Confluent Platform features are built into the brokers as a function of Confluent Server, as described here. The example Kafka use cases above could also be considered Confluent Platform use cases. This page includes the following topics:

To see examples of consumers written in various languages, refer to the specific language sections. Confluent Platform includes client libraries for multiple languages that provide both low-level access to Apache Kafka and higher level stream processing. Metadata - Most metadata about the cluster brokers, topics, partitions, and configs can be read using GET requests for the corresponding URLs. Many of the commercial Confluent Platform features are built into the brokers as a function of Confluent Server, as described here.

Configure the Connect workers by adding these properties in connect-distributed.properties , depending on whether the connectors are sources or sinks. Kafka Consumer Confluent Platform includes the Java consumer shipped with Apache Kafka. The server side (Kafka broker, ZooKeeper, and Confluent Schema Registry) can be separated from the business applications. 1. One of the reasons that separating out packaging and deployment from the stream processing framework is so important is because packaging and deployment is an area undergoing its own renaissance. The following functionality is currently exposed and available through Confluent REST APIs. The official MongoDB Connector for Apache Kafka is developed and supported by MongoDB engineers and verified by Confluent.The Connector enables MongoDB to be configured as both a sink and a source for Apache Kafka.. The example Kafka use cases above could also be considered Confluent Platform use cases. I create succesfully a topic from kafka: ./bin/kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --Stack Overflow. Docker image for deploying a Separate each word with _. Before attempting to create and use ACLs, familiarize yourself with the concepts described in this section; your understanding of them is key to your success when creating and using ACLs to manage access to components and cluster data. This section gives a high-level overview of how the consumer works and an introduction to the configuration settings for tuning. Confluent Platform includes client libraries for multiple languages that provide both low-level access to Apache Kafka and higher level stream processing. Confluent recommends you read and understand Kafka Connect Concepts before moving ahead. Access Control Lists (ACLs) provide important authorization controls for your enterprises Apache Kafka cluster data. The easiest way to follow this tutorial is with Confluent Cloud because you dont have to run a local Kafka cluster. 
Clients. Pulsar has a heavy-weight architecture that is the most complex in this comparison. Features. with a single underscore (_).

Download HiveMQ and youre ready to try our reliable, scalable and fast MQTT broker. Apache Kafka on Kubernetes, Microsoft Azure, and ZooKeeper with Lena Hall. Clients.

Download HiveMQ and youre ready to try our reliable, scalable and fast MQTT broker. Apache Kafka on Kubernetes, Microsoft Azure, and ZooKeeper with Lena Hall. Clients.  The example Kafka use cases above could also be considered Confluent Platform use cases. When you sign up for Confluent Cloud, apply promo code C50INTEG to receive an additional $50 free usage ().From the Console, click on LEARN to provision a cluster and click on Clients to get the cluster-specific configurations and credentials Pulls 100M+ Overview Tags. About; //localhost:9092 --link zookeeper:zookeeper confluent/kafka user2563451. It combines the simplicity of writing and deploying standard Java and Scala applications on the client side with the benefits of Kafkas server-side cluster technology. This page includes the following topics: Download HiveMQ and youre ready to try our reliable, scalable and fast MQTT broker. In Confluent Platform, realtime streaming events are stored in a Kafka topic, which is essentially an append-only log.For more info, see the Apache Kafka Introduction.. This page will help you in getting started with Kafka Connect. Kafka Streams Overview Kafka Streams is a client library for building applications and microservices, where the input and output data are stored in an Apache Kafka cluster. Convert to upper-case. Pulls 100M+ Overview Tags. Built and operated by the original creators of Apache Kafka, Confluent Cloud provides a simple, scalable, resilient, and secure event streaming platform for the cloud-first enterprise, the DevOps-starved organization, or the agile developer on a mission. 
Module 2 of Confluent Platform demo (cp-demo) with a playbook for copying data between the on-prem and Confluent Cloud clusters : GKE to Cloud: N: Y: Uses Google Kubernetes Engine, Confluent Cloud, and Confluent Replicator to explore a multicloud deployment : DevOps for Apache Kafka with Kubernetes and GitOps: N: N For Confluent Control Center stream monitoring to work with Kafka Connect, you must configure SASL/PLAIN for the Confluent Monitoring Interceptors in Kafka Connect. Many of the commercial Confluent Platform features are built into the brokers as a function of Confluent Server, as described here. I create succesfully a topic from kafka: ./bin/kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --Stack Overflow. This section gives a high-level overview of how the consumer works and an introduction to the configuration settings for tuning. If you are using the Kafka Streams API, you can read on how to configure equivalent SSL and SASL parameters. Apache Kafka uses ZooKeeper to store persistent cluster metadata and is a critical component of the Confluent Platform deployment. In this step, you create two topics by using Confluent Control Center.Control Center provides the features for building and monitoring production data The server side (Kafka broker, ZooKeeper, and Confluent Schema Registry) can be separated from the business applications. The Kafka ecosystem includes two different mature Kubernetes operators, one open source and one commercial. This page will help you in getting started with Kafka Connect. The easiest way to follow this tutorial is with Confluent Cloud because you dont have to run a local Kafka cluster. Convert to upper-case. To see a comprehensive list of supported clients, refer to the Clients section under Supported Versions and Interoperability . The following functionality is currently exposed and available through Confluent REST APIs. 
; Producers - Instead of exposing producer objects, the API accepts produce requests targeted at specific topics or partitions and The official MongoDB Connector for Apache Kafka is developed and supported by MongoDB engineers and verified by Confluent.The Connector enables MongoDB to be configured as both a sink and a source for Apache Kafka.. I create succesfully a topic from kafka: ./bin/kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --Stack Overflow. How Confluent Platform fits in. Metadata - Most metadata about the cluster brokers, topics, partitions, and configs can be read using GET requests for the corresponding URLs. with a single underscore (_). Kafka Connect 101 is also a free course you can check out before moving ahead.. ; Producers - Instead of exposing producer objects, the API accepts produce requests targeted at specific topics or partitions and Confluent Platform is a specialized distribution of Kafka at its core, with lots of cool features and additional APIs built in. In cloud deployments that leverage managed services like Confluent Cloud, such infrastructure teams are not usually required. One of the reasons that separating out packaging and deployment from the stream processing framework is so important is because packaging and deployment is an area undergoing its own renaissance. To see a comprehensive list of supported clients, refer to the Clients section under Supported Versions and Interoperability . Before attempting to create and use ACLs, familiarize yourself with the concepts described in this section; your understanding of them is key to your success when creating and using ACLs to manage access to components and cluster data. Apache Kafka uses ZooKeeper to store persistent cluster metadata and is a critical component of the Confluent Platform deployment. The server side (Kafka broker, ZooKeeper, and Confluent Schema Registry) can be separated from the business applications. 
such as Kubernetes and Confluent Operator. Official Confluent Docker Image for Kafka (Community Version) Container. Module 2 of Confluent Platform demo (cp-demo) with a playbook for copying data between the on-prem and Confluent Cloud clusters : GKE to Cloud: N: Y: Uses Google Kubernetes Engine, Confluent Cloud, and Confluent Replicator to explore a multicloud deployment : DevOps for Apache Kafka with Kubernetes and GitOps: N: N In Confluent Platform, realtime streaming events are stored in a Kafka topic, which is essentially an append-only log.For more info, see the Apache Kafka Introduction.. Apache Kafka on Kubernetes, Microsoft Azure, and ZooKeeper with Lena Hall. Docker, Mesos, and Kubernetes, Oh My! Kubernetes pod resolve external kafka hostname in coredns not as hostaliases inside pod. Clients. In cloud deployments that leverage managed services like Confluent Cloud, such infrastructure teams are not usually required. Confluent Platform is a specialized distribution of Kafka at its core, with lots of cool features and additional APIs built in. The following functionality is currently exposed and available through Confluent REST APIs. Docker, Mesos, and Kubernetes, Oh My! Official Confluent Docker Image for Kafka (Community Version) Container. For the Confluent Replicator image (cp-enterprise-replicator), convert the property variables as following and use them as environment variables: Prefix with CONNECT_.

The example Kafka use cases above could also be considered Confluent Platform use cases. When you sign up for Confluent Cloud, apply promo code C50INTEG to receive an additional $50 free usage ().From the Console, click on LEARN to provision a cluster and click on Clients to get the cluster-specific configurations and credentials Pulls 100M+ Overview Tags. About; //localhost:9092 --link zookeeper:zookeeper confluent/kafka user2563451. It combines the simplicity of writing and deploying standard Java and Scala applications on the client side with the benefits of Kafkas server-side cluster technology. This page includes the following topics: Download HiveMQ and youre ready to try our reliable, scalable and fast MQTT broker. In Confluent Platform, realtime streaming events are stored in a Kafka topic, which is essentially an append-only log.For more info, see the Apache Kafka Introduction.. This page will help you in getting started with Kafka Connect. Kafka Streams Overview Kafka Streams is a client library for building applications and microservices, where the input and output data are stored in an Apache Kafka cluster. Convert to upper-case. Pulls 100M+ Overview Tags. Built and operated by the original creators of Apache Kafka, Confluent Cloud provides a simple, scalable, resilient, and secure event streaming platform for the cloud-first enterprise, the DevOps-starved organization, or the agile developer on a mission. Module 2 of Confluent Platform demo (cp-demo) with a playbook for copying data between the on-prem and Confluent Cloud clusters : GKE to Cloud: N: Y: Uses Google Kubernetes Engine, Confluent Cloud, and Confluent Replicator to explore a multicloud deployment : DevOps for Apache Kafka with Kubernetes and GitOps: N: N For Confluent Control Center stream monitoring to work with Kafka Connect, you must configure SASL/PLAIN for the Confluent Monitoring Interceptors in Kafka Connect. 
Many of the commercial Confluent Platform features are built into the brokers as a function of Confluent Server, as described here. I create succesfully a topic from kafka: ./bin/kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --Stack Overflow. This section gives a high-level overview of how the consumer works and an introduction to the configuration settings for tuning. If you are using the Kafka Streams API, you can read on how to configure equivalent SSL and SASL parameters. Apache Kafka uses ZooKeeper to store persistent cluster metadata and is a critical component of the Confluent Platform deployment. In this step, you create two topics by using Confluent Control Center.Control Center provides the features for building and monitoring production data The server side (Kafka broker, ZooKeeper, and Confluent Schema Registry) can be separated from the business applications. The Kafka ecosystem includes two different mature Kubernetes operators, one open source and one commercial. This page will help you in getting started with Kafka Connect. The easiest way to follow this tutorial is with Confluent Cloud because you dont have to run a local Kafka cluster. Convert to upper-case. To see a comprehensive list of supported clients, refer to the Clients section under Supported Versions and Interoperability . The following functionality is currently exposed and available through Confluent REST APIs. ; Producers - Instead of exposing producer objects, the API accepts produce requests targeted at specific topics or partitions and The official MongoDB Connector for Apache Kafka is developed and supported by MongoDB engineers and verified by Confluent.The Connector enables MongoDB to be configured as both a sink and a source for Apache Kafka.. I create succesfully a topic from kafka: ./bin/kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --Stack Overflow. How Confluent Platform fits in. 
Through the REST APIs, most metadata about the cluster (brokers, topics, partitions, and configs) can be read using GET requests for the corresponding URLs.

Confluent Platform is, at its core, a specialized distribution of Kafka with many additional features and APIs built in. Confluent also publishes an official Docker image for Kafka (Community Version). In cloud deployments that use managed services such as Confluent Cloud, the infrastructure teams needed to operate self-managed clusters are usually not required. One reason that separating packaging and deployment from the stream processing framework matters so much is that packaging and deployment is an area undergoing its own renaissance, with tools such as Kubernetes and Confluent Operator.

Kafka Connect 101 is a free course you can take before moving ahead.

Before attempting to create and use ACLs, familiarize yourself with the concepts described in this section; understanding them is key to successfully creating and using ACLs to manage access to components and cluster data.
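The REST functionality described here (metadata reads via GET, produce requests via POST) can be sketched with Python's standard `urllib.request`. This assumes a Confluent REST Proxy speaking API v2 at `http://localhost:8082` (the proxy's usual default port) and the `application/vnd.kafka.json.v2+json` content type for JSON records; the topic name `orders` is hypothetical. The helpers only construct `Request` objects, so nothing is sent until one is passed to `urlopen`.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Assumption: a Confluent REST Proxy (API v2) listening on its usual default port 8082.
BASE_URL = "http://localhost:8082"
JSON_V2 = "application/vnd.kafka.json.v2+json"  # embedded-JSON record format for API v2


def list_topics_request(base_url: str = BASE_URL) -> urllib.request.Request:
    """Metadata read: GET /topics lists topic names."""
    return urllib.request.Request(f"{base_url}/topics", method="GET")


def topic_metadata_request(topic: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Metadata read for a single topic: GET /topics/<topic>."""
    return urllib.request.Request(f"{base_url}/topics/{topic}", method="GET")


def produce_request(topic: str, values: List[Dict[str, Any]],
                    base_url: str = BASE_URL) -> urllib.request.Request:
    """Produce request: POST a batch of JSON values to /topics/<topic>."""
    body = json.dumps({"records": [{"value": v} for v in values]}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/topics/{topic}",
        data=body,
        headers={"Content-Type": JSON_V2},
        method="POST",
    )


req = produce_request("orders", [{"id": 1, "amount": 9.5}])  # "orders" is a hypothetical topic
print(req.get_method(), req.full_url)  # POST http://localhost:8082/topics/orders
# Against a running proxy: print(urllib.request.urlopen(req).read().decode())
```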
Confluent also offers a Kafka-based distribution with added security, tiered storage, and more. The new Producer and Consumer clients support security for Kafka versions 0.9.0 and higher.

For the Confluent Replicator image (cp-enterprise-replicator), convert the property names as follows and use them as environment variables:

- Prefix with CONNECT_.
- Convert to upper-case.
- Separate each word with _.
- Replace a period (.) with a single underscore (_).

Step 2: Create Kafka topics for storing your data.
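The naming rules for the Replicator image's environment variables can be captured in a few lines of Python. This is a sketch that applies only the stated rules (prefix `CONNECT_`, upper-case, each period becomes one underscore); property names that already contain dashes or underscores may need extra escaping that it does not attempt. The broker address and group id in the example are placeholders.

```python
from typing import Dict


def replicator_env_var(prop: str) -> str:
    """Turn a property name such as 'bootstrap.servers' into 'CONNECT_BOOTSTRAP_SERVERS'.

    Applies only the stated rules: prefix CONNECT_, upper-case, and replace each period
    with a single underscore. Dashes/underscores in the original name are left unescaped.
    """
    return "CONNECT_" + prop.replace(".", "_").upper()


def to_env(props: Dict[str, str]) -> Dict[str, str]:
    """Convert worker properties into environment variables for the container."""
    return {replicator_env_var(k): str(v) for k, v in props.items()}


env = to_env({"bootstrap.servers": "broker:9092", "group.id": "replicator"})  # placeholder values
print(env)
# {'CONNECT_BOOTSTRAP_SERVERS': 'broker:9092', 'CONNECT_GROUP_ID': 'replicator'}
```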
When the broker requires client authentication, you can store the client's security settings in a client properties file. To see examples of consumers written in various languages, refer to the specific language sections. Confluent recommends that you read and understand Kafka Connect Concepts before moving ahead.

Confluent provides a truly cloud-native experience, completing Kafka with a holistic set of enterprise-grade features to unleash developer productivity, operate efficiently at scale, and meet all of your architectural requirements before moving to production.
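For a broker that requires client authentication over SASL/PLAIN, a client properties file might look like the output of the Python sketch below. The keys `bootstrap.servers`, `security.protocol`, `sasl.mechanism`, and `sasl.jaas.config` are standard Kafka client settings; the broker address, username, and password are placeholders, and whether your listener uses `SASL_SSL` or `SASL_PLAINTEXT` is an assumption to check against the broker configuration.

```python
from typing import Dict


def sasl_plain_client_props(bootstrap: str, username: str, password: str,
                            protocol: str = "SASL_SSL") -> Dict[str, str]:
    """Client settings for SASL/PLAIN authentication (all values here are placeholders)."""
    jaas = ('org.apache.kafka.common.security.plain.PlainLoginModule required '
            f'username="{username}" password="{password}";')
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": protocol,  # SASL_SSL encrypts traffic; SASL_PLAINTEXT does not
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
    }


def to_properties(props: Dict[str, str]) -> str:
    """Render settings as Java .properties 'key=value' lines.

    Values containing backslashes would need escaping; the placeholders here have none.
    """
    return "\n".join(f"{k}={v}" for k, v in props.items()) + "\n"


text = to_properties(sasl_plain_client_props("broker1:9093", "client", "client-secret"))
print(text)  # save as e.g. client.properties and pass it to the client tools
```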

Beginning with Confluent Platform version 6.0, Kafka Connect can automatically create topics for source connectors if the topics do not exist on the Apache Kafka broker.
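Automatic topic creation for source connectors is controlled by `topic.creation.*` properties in the connector configuration (added in Apache Kafka 2.6, on which Confluent Platform 6.0 is based). The Python sketch below adds the two required default-group settings to a source connector config before it is submitted to the Connect REST API. The connector class is the FileStream example connector shipped with Kafka, while the file path, topic, and connector name are hypothetical; the worker must also have `topic.creation.enable` set to true, which is its default.

```python
import json
from typing import Any, Dict


def with_topic_creation(config: Dict[str, Any], partitions: int,
                        replication_factor: int) -> Dict[str, Any]:
    """Return a copy of a source connector config with default topic-creation settings.

    Both default-group properties must be present for Connect to create missing topics.
    """
    out = dict(config)  # leave the caller's dict untouched
    out["topic.creation.default.replication.factor"] = str(replication_factor)
    out["topic.creation.default.partitions"] = str(partitions)
    return out


source = {
    "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
    "file": "/tmp/input.txt",  # hypothetical input file
    "topic": "file-lines",     # hypothetical topic that may not exist yet
}
payload = {"name": "file-source",
           "config": with_topic_creation(source, partitions=3, replication_factor=1)}
print(json.dumps(payload, indent=2))  # body for POST /connectors on the Connect REST API
```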
Josh Software, part of a project in India to house more than 100,000 people in affordable smart homes, pushes data from millions of sensors to Kafka, processes it in Apache Spark, and writes the results to MongoDB, which connects the operational and analytical data sets. By streaming data from millions of sensors in near real time, the project is creating truly smart homes.

Confluent Platform includes the Java consumer shipped with Apache Kafka. Kubernetes operators ease cluster management significantly. Pulsar, by contrast, has a heavyweight architecture that is the most complex in this comparison.
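Part of how the Kafka consumer works is that the members of a consumer group split a topic's partitions among themselves. The Python sketch below imitates the per-topic arithmetic of Kafka's range assignment strategy: members are sorted, each gets a contiguous block of partitions, and the first `partitions % members` members get one extra. It is an illustration under the assumption of a single topic; it does not model rebalancing, sticky or cooperative assignors, or group coordination, and the consumer names are hypothetical.

```python
from typing import Dict, List


def range_assign(num_partitions: int, consumers: List[str]) -> Dict[str, List[int]]:
    """Split partitions 0..num_partitions-1 of one topic into contiguous ranges per consumer."""
    if not consumers:
        return {}
    members = sorted(consumers)                       # assignment order follows member id
    per_member, extra = divmod(num_partitions, len(members))
    assignment: Dict[str, List[int]] = {}
    start = 0
    for i, member in enumerate(members):
        count = per_member + (1 if i < extra else 0)  # first `extra` members get one more
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


print(range_assign(7, ["consumer-b", "consumer-a", "consumer-c"]))
# {'consumer-a': [0, 1, 2], 'consumer-b': [3, 4], 'consumer-c': [5, 6]}
```

With more consumers than partitions, the extra members simply receive an empty list, which is why adding consumers beyond the partition count does not increase parallelism.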

To enable the Confluent Monitoring Interceptors, configure the Connect workers by adding the interceptor properties to `connect-distributed.properties`, depending on whether the connectors are sources or sinks.
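The monitoring-interceptor settings for a Connect worker can be generated with a short Python sketch like the following. It assumes the interceptor classes `io.confluent.monitoring.clients.interceptor.MonitoringProducerInterceptor` (source connectors) and `MonitoringConsumerInterceptor` (sink connectors), with SASL/PLAIN settings passed through a `confluent.monitoring.interceptor.` prefix; verify these names against the Control Center documentation for your Confluent Platform version. The username and password are placeholders.

```python
from typing import Dict

# Assumed interceptor class names; check them against your Confluent Platform version.
INTERCEPTORS = {
    "producer": "io.confluent.monitoring.clients.interceptor.MonitoringProducerInterceptor",  # sources
    "consumer": "io.confluent.monitoring.clients.interceptor.MonitoringConsumerInterceptor",  # sinks
}


def monitoring_interceptor_props(role: str, username: str, password: str) -> Dict[str, str]:
    """Worker properties that enable a monitoring interceptor with SASL/PLAIN.

    role is "producer" for source connectors or "consumer" for sink connectors.
    """
    if role not in INTERCEPTORS:
        raise ValueError("role must be 'producer' or 'consumer'")
    prefix = f"{role}.confluent.monitoring.interceptor."
    return {
        f"{role}.interceptor.classes": INTERCEPTORS[role],
        prefix + "security.protocol": "SASL_SSL",
        prefix + "sasl.mechanism": "PLAIN",
        prefix + "sasl.jaas.config": (
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
            f'username="{username}" password="{password}";'
        ),
    }


# A worker that runs both source and sink connectors gets both sets of properties.
worker_props = {**monitoring_interceptor_props("producer", "connect", "connect-secret"),
                **monitoring_interceptor_props("consumer", "connect", "connect-secret")}
for key, value in worker_props.items():
    print(f"{key}={value}")  # lines to append to connect-distributed.properties
```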
Confluent Replicator is a Kafka connector and runs on a Kafka Connect cluster.

Confluent Platform includes client libraries for multiple languages that provide both low-level access to Apache Kafka and higher-level stream processing. Easily build robust, reactive data pipelines that stream events between applications and services in real time.

Access Control Lists (ACLs) provide important authorization controls for your enterprise's Apache Kafka cluster data.
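To illustrate how ACLs authorize a request, the Python sketch below models the basic evaluation rule of Kafka's ACL authorizer: a matching DENY entry wins over any ALLOW entry, and when no entry matches, the request is denied (assuming `allow.everyone.if.no.acl.found` keeps its default of false). The `AclEntry` type and the principal, operation, and resource strings are hypothetical; prefixed resource patterns, host filtering, implied operations, and super users are deliberately left out.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class AclEntry:
    principal: str   # e.g. "User:alice", or "User:*" for any user
    operation: str   # e.g. "Read", "Write", or "All"
    resource: str    # e.g. "Topic:orders"; literal names only in this sketch
    permission: str  # "Allow" or "Deny"


def _matches(entry: AclEntry, principal: str, operation: str, resource: str) -> bool:
    """True if the entry applies to this principal, operation, and resource."""
    return (entry.principal in (principal, "User:*")
            and entry.operation in (operation, "All")
            and entry.resource == resource)


def is_authorized(acls: Iterable[AclEntry], principal: str, operation: str, resource: str) -> bool:
    """Deny beats allow; with no matching entry the request is rejected."""
    matching = [a for a in acls if _matches(a, principal, operation, resource)]
    if any(a.permission == "Deny" for a in matching):
        return False
    return any(a.permission == "Allow" for a in matching)


acls = [
    AclEntry("User:*", "Read", "Topic:orders", "Allow"),
    AclEntry("User:mallory", "All", "Topic:orders", "Deny"),
]
print(is_authorized(acls, "User:alice", "Read", "Topic:orders"))    # True
print(is_authorized(acls, "User:mallory", "Read", "Topic:orders"))  # False (explicit deny)
print(is_authorized(acls, "User:alice", "Write", "Topic:orders"))   # False (no matching ACL)
```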
